I’ve done it again.

It seems like only yesterday I moved from Blogspot to wordpress.com, and now I’m moving again. Yes! I finally have my own domain, which is quite exciting!! The new URL, in case you haven’t noticed yet:

All RSS followers, please update your feed URL. If you are reading this in your RSS client, you don’t strictly have to update it yet, because I wrote this post on my new domain; but keep in mind that a year from now the DNS forwarding will stop working.

The old domain’s feed isn’t working with the new domain, so please do update. The new feed URL: http://frishit.com/feed/

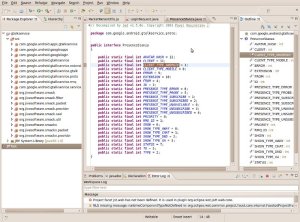

Setting up XMPP BOSH server

This tutorial explains how to set up and troubleshoot an XMPP server with BOSH. I won’t get into what XMPP is or what it’s good for. The first two paragraphs are theoretical. XMPP is a stateful protocol in a client-server model. If a web application needs to work with XMPP, a few problems arise. Modern browsers don’t support XMPP natively, so all XMPP traffic must be handled by a program running inside the browser (JavaScript/Flash etc.). The first problem is that HTTP is a stateless protocol, meaning each HTTP request is unrelated to any other request. However, this problem can be addressed at the application level, for example by using cookies or POST data.

The second problem is the unidirectional nature of HTTP: only the client sends requests, and the server can only respond. The server’s inability to push data makes it unnatural to implement XMPP over HTTP. The problem disappears if the client program can open direct TCP connections (eliminating the need for HTTP). However, if we want to address the problem within the HTTP domain (for example, because JavaScript can only issue HTTP requests), there are two possible solutions, both of which require “middleware” to bridge between HTTP and XMPP. The solutions are “polling” (repeatedly sending HTTP requests asking “is there new data for me?”) and “long polling”, aka BOSH. The idea behind BOSH is to exploit the fact that the server doesn’t have to respond as soon as it gets a request. The response is delayed until the server has data for the client, and then it is sent as the response. As soon as the client gets it, the client makes a new request (even if it has nothing to send), and so forth.
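The long-polling cycle described above can be sketched in shell with curl. This is a minimal illustration, not a real client: it assumes a BOSH session already exists (you know its `sid`), and the function names are hypothetical. Each request carries a request ID (`rid`) that goes up by one per request, and the connection manager holds the request open until it has data or its wait time runs out.

```shell
#!/usr/bin/env bash
# Minimal long-polling sketch (hypothetical helper names).
# Assumes an existing BOSH session id (sid) and a starting request id (rid).

# An empty BOSH request: no payload, it only says "send me whatever you have".
bosh_empty_body() {
  local sid=$1 rid=$2
  printf "<body rid='%s' sid='%s' xmlns='http://jabber.org/protocol/httpbind'/>" "$rid" "$sid"
}

# Keep one request pending at all times: as soon as the (possibly delayed)
# response arrives, print it and immediately send the next request.
bosh_poll_loop() {
  local url=$1 sid=$2 rid=$3
  while true; do
    curl -s -d "$(bosh_empty_body "$sid" "$rid")" "$url" || break
    echo
    rid=$((rid + 1))   # every new request uses the next rid
  done
}

# Usage (not run here): bosh_poll_loop http://localhost:7070/http-bind/ SID 123457
```

The loop never sleeps between requests; the waiting happens on the server side, which is exactly what distinguishes long polling from plain polling.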

BOSH is much more efficient, both in server load and in traffic. In this tutorial I set up the Openfire XMPP server (which also provides the BOSH functionality) with the JSJaC library as the client, using Apache as the web server on Ubuntu 10.04. Openfire has a Debian package, so installation is fairly easy: just download the package and install it. After installation, browse to port 9090 on the machine it was installed on, and from there it’s an easy web-driven setup. If you choose to use MySQL as Openfire’s DB, make sure to create a dedicated database beforehand (mysqladmin create).

After the initial setup, I wasn’t able to log in with the “admin” user. This post solved my problem. openfire.xml is located in /etc/openfire (if you installed from the package); you will need root privileges to edit it, and then restart Openfire (sudo /etc/init.d/openfire restart). Other than that, everything worked fine. The Openfire server (like all major XMPP servers) provides the BOSH functionality, aka “HTTP Binding”, aka “Connection Manager”. By default it listens on port 7070, at “/http-bind/” (the trailing slash is important).

To make sure it works (this is the part I couldn’t find anywhere, which is why it took me a long time to resolve all the problems), I used “curl”, a very handy tool (sudo apt-get install curl). To test the “BOSH server”:

# curl -d "<body rid='123456' xmlns:xmpp='urn:xmpp:xbosh' />" http://localhost:7070/http-bind/

Replace “localhost” with your server name, and notice the trailing slash. The result should look like:

<body xmlns="http://jabber.org/protocol/httpbind" xmlns:stream="http://etherx.jabber.org/streams" authid="2b10da3b" sid="2b10da3b" secure="true" requests="2" inactivity="30" polling="5" wait="60"><stream:features><mechanisms xmlns="urn:ietf:params:xml:ns:xmpp-sasl"><mechanism>DIGEST-MD5</mechanism><mechanism>PLAIN</mechanism><mechanism>ANONYMOUS</mechanism><mechanism>CRAM-MD5</mechanism></mechanisms><compression xmlns="http://jabber.org/features/compress"><method>zlib</method></compression><bind xmlns="urn:ietf:params:xml:ns:xmpp-bind"/><session xmlns="urn:ietf:params:xml:ns:xmpp-session"/></stream:features></body>
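The one-line body above is about the smallest request that still gets a reply. A fuller session-creation request, using the attributes defined in XEP-0124 and XEP-0206 (`to`, `wait`, `hold`, `ver`, `xmpp:version`), can be built with a small helper, and the `sid` pulled out of the reply; every later request in the session must carry that `sid`. The function names and the `example.com` domain are placeholders, and the extraction assumes double-quoted attributes as in Openfire’s reply above.

```shell
#!/usr/bin/env bash
# Build a session-creation request with the XEP-0124 / XEP-0206 attributes.
bosh_session_body() {
  local rid=$1 domain=$2
  printf "<body content='text/xml; charset=utf-8' hold='1' rid='%s' to='%s' ver='1.6' wait='60' xml:lang='en' xmpp:version='1.0' xmlns='http://jabber.org/protocol/httpbind' xmlns:xmpp='urn:xmpp:xbosh'/>" "$rid" "$domain"
}

# Extract the session id from the reply (assumes sid="..." with double quotes).
bosh_sid() {
  sed -n 's/.* sid="\([^"]*\)".*/\1/p'
}

# Usage (not run here):
#   curl -s -d "$(bosh_session_body 123456 example.com)" http://localhost:7070/http-bind/ | bosh_sid
```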

Once verified, we can continue to the next step. Since the client is JavaScript-based, all JavaScript restrictions enforced by the browser apply. One of these restrictions is the “same origin policy”. It means that JavaScript can only send HTTP requests to the domain (and port) it was loaded from, and since it is served over HTTP (port 80), it can’t make requests to port 7070. Solution: the JavaScript client makes its requests to the same domain and port, and Apache forwards them locally to port 7070. I guess you can use the same method to forward even to a different server, but I didn’t try. I configured forwarding following this post, but there is probably more than one way to do it.

Add the following lines to /etc/apache2/httpd.conf (root privileges needed):

LoadModule proxy_module /usr/lib/apache2/modules/mod_proxy.so

LoadModule proxy_http_module /usr/lib/apache2/modules/mod_proxy_http.so

Then add the following lines to /etc/apache2/apache2.conf (root privileges needed):

ProxyRequests Off

ProxyPass /http-bind http://localhost:7070/http-bind/

ProxyPassReverse /http-bind http://localhost:7070/http-bind/

ProxyPass /http-binds http://localhost:7443/http-bind/

ProxyPassReverse /http-binds http://localhost:7443/http-bind/

Now restart Apache (sudo /etc/init.d/apache2 restart) and make sure it starts properly. To verify that the forwarding works, use the same curl method, this time sending the request to Apache:

# curl -d "<body rid='123456' xmlns:xmpp='urn:xmpp:xbosh' />" http://localhost/http-bind

The result should be the same as before. If it isn’t, there is a problem with the forwarding. Once it works, the server side is ready. On the client (in my case JSJaC), specify the BOSH/HTTP Bind “backend” (as opposed to “polling”). For the “http bind” URL just use “/http-bind”, and everything should work. Notice that if you open the client locally on your desktop (not served by Apache), it won’t work because of the “same origin policy” mentioned before.
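Comparing the direct and proxied curl outputs by eye is error-prone, because `sid` and `authid` change with every new session. A minimal sketch that keeps only the `<stream:features>` part of each reply and compares that; it assumes both endpoints run on the same machine with the URLs used in this post, and the function names are hypothetical.

```shell
#!/usr/bin/env bash
# Keep only <stream:features>...</stream:features>; sid/authid differ per
# session and would make a plain comparison fail.
bosh_features() {
  sed -n 's/.*\(<stream:features>.*<\/stream:features>\).*/\1/p'
}

# Send the same test body to the direct and the proxied endpoint and compare.
check_bosh_proxy() {
  local direct=$1 proxied=$2
  local body="<body rid='123456' xmlns:xmpp='urn:xmpp:xbosh' />"
  local a b
  a=$(curl -s -d "$body" "$direct" | bosh_features)
  b=$(curl -s -d "$body" "$proxied" | bosh_features)
  if [ -n "$a" ] && [ "$a" = "$b" ]; then
    echo "proxy OK"
  else
    echo "proxy problem" >&2
    return 1
  fi
}

# Usage (not run here):
#   check_bosh_proxy http://localhost:7070/http-bind/ http://localhost/http-bind
```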

I hope you find this tutorial useful; it sure would have helped me… 🙂

iPhone tethering with Ubuntu

Today was the first time I used iPhone tethering. Tethering means connecting a computer to the internet using the iPhone’s cellular connectivity (3G/EDGE). The iPhone connects to the computer via USB cable or Bluetooth and acts as a cellular modem. It’s useful when you’re away with a laptop and there are no wireless hot spots around.

There are many tutorials out there, but I found most of them misleading (advising you to use third-party software or jailbreak your iPhone, both unnecessary), and none of them cover Linux. This tutorial is really easy and simple.

Step 1 – Enable internet sharing on your iPhone

On your iPhone go to Settings -> General -> Network -> Internet sharing -> On. If “Internet sharing” isn’t shown, open Safari and browse to http://help.benm.at. On this page tap “Tethering”, then select your country and your mobile carrier. Accept the profile and reboot your iPhone. After the reboot, the “Internet sharing” option should be shown. In my case, my carrier wasn’t on the list, so I generated a custom file (the last option). The APN/user/password were easily found using Google. However, “Internet sharing” stayed hidden. The funny thing is that after I removed the custom profile, it magically appeared. My iPhone version is 3.1.2 and it worked. I noticed they have a warning for 3.1.3, so watch out if you have it.

Step 2 – Install necessary software on Ubuntu

As always, I provide instructions for Ubuntu. The driver you need is called “ipheth”, and it depends on “libimobiledevice”. You can find both in Lucid’s official repositories. However, only version 0.9.7 of libimobiledevice is there, and while it may be enough for tethering, I recommend using the newer version from Paul McEnery’s PPA (for Karmic it’s still version 0.9.7):

# sudo add-apt-repository ppa:pmcenery/ppa

Executing: gpg --ignore-time-conflict --no-options --no-default-keyring --secret-keyring /etc/apt/secring.gpg --trustdb-name /etc/apt/trustdb.gpg --keyring /etc/apt/trusted.gpg --primary-keyring /etc/apt/trusted.gpg --keyserver keyserver.ubuntu.com --recv 3AE22276BF4F39C8D6117D7F4EA3A911D48B8E25

gpg: requesting key D48B8E25 from hkp server keyserver.ubuntu.com

gpg: key D48B8E25: public key "Launchpad PPA for Paul McEnery" imported

gpg: Total number processed: 1

gpg: imported: 1 (RSA: 1)

# sudo apt-get update

Now install the driver (and its dependencies, which include the library):

# sudo apt-get install ipheth-utils

Load the driver into the running kernel:

# sudo modprobe ipheth

At this point, if you run “dmesg”, the last line should show something like “usbcore: registered new interface driver ipheth”.
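Instead of reading the tail of dmesg, you can also check directly whether the module is loaded: /proc/modules lists every loaded module, with its name in the first column (the same data lsmod prints). A minimal sketch; the function name is hypothetical, and the optional second argument exists only so the check can be tried against a test file.

```shell
#!/usr/bin/env bash
# Succeed if the named kernel module appears in /proc/modules.
module_loaded() {
  local name=$1 modules_file=${2:-/proc/modules}
  awk -v m="$name" '$1 == m { found = 1 } END { exit !found }' "$modules_file"
}

# Usage:
#   module_loaded ipheth && echo "ipheth is loaded" || sudo modprobe ipheth
```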

Step 3 – Plug your iPhone using USB cable

As soon as you connect your device, a new network interface should appear, configured and ready to use (if you use NetworkManager). You can list your interfaces with “ifconfig -a”. On your iPhone there should be a label saying “Internet Tethering”. If you don’t use NetworkManager, “Internet Tethering” is triggered when you make a DHCP request on that interface (for example, using “dhclient”).
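Without NetworkManager you first need to know which interface belongs to the iPhone. A minimal sketch that finds it through sysfs, by following each interface’s device/driver symlink; it assumes the standard /sys/class/net layout, and the function name is hypothetical.

```shell
#!/usr/bin/env bash
# Print interfaces whose driver is ipheth, using the
# /sys/class/net/<iface>/device/driver symlink.
ipheth_ifaces() {
  local sysnet=${1:-/sys/class/net} dev drv
  for dev in "$sysnet"/*; do
    [ -e "$dev/device/driver" ] || continue   # skip virtual interfaces like lo
    drv=$(basename "$(readlink -f "$dev/device/driver")")
    [ "$drv" = "ipheth" ] && basename "$dev"
  done
  return 0
}

# Usage (not run here): request a DHCP lease on each iPhone interface.
#   for i in $(ipheth_ifaces); do sudo dhclient "$i"; done
```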

That’s it. As promised, easy and simple. Thanks to Paul McEnery, there is plenty of other cool iPhone-related stuff you can install from his PPA (on Lucid).

Enjoy!

JeOS on VirtualBox

I always needed a test environment for my destructive experiments. A sandbox, if you like. A place where I can do whatever I want without worrying about the consequences. I’m tired of destroying my operating system. I’m talking about the times when I modify and rebuild kernel modules/kernels/libraries for experimental purposes, such as making KVM support Mac OS X, or making a netfilter/iptables module support advanced connection tracking. This time, I needed to patch the rtl8187 kernel module (wireless driver) to allow packet injection. I decided to solve the problem once and for all.

The problem, if you didn’t follow, is that these kinds of changes might have a destructive effect on the running operating system. There are basically two approaches to this problem:

1. Take a snapshot, or “point in time”, of the operating system, and after the experiment is finished, return to that point.

2. Experiment in a “sandbox” where nobody cares what gets ruined and nothing can harm the operating system.

I prefer the second approach, because when you roll back to a certain point in time, everything rolls back, and since I’m always doing more than one thing, I don’t want my other stuff to roll back as well. Besides, that kind of solution is always “heavy”, as the whole system needs to be compared to the way it was before. Sure, you can use timestamps and more sophisticated comparisons to make it quicker, but unless you use real snapshots (such as in LVM or ZFS), it’s not ideal.

So we go with the sandbox approach. Once again we have a few options. We can create a chroot jail, but it might have problems accessing physical devices (I never actually tried), and I don’t really like the way the chrooted environment is created. We can use a “live” operating system that runs entirely from RAM (no changes are made to disk), but then only one operating system can run at a time, which means that during the potentially destructive session my normal operating system won’t be available, and that is exactly when I might need it.

A virtual machine seems like an adequate solution. It fulfills the sandbox requirement, it’s easy to set up, and some virtualization platforms even have snapshot abilities. The only problem is that it doesn’t always have access to physical devices. However, in this case the device is USB-based and therefore accessible from within the virtual machine as well. For the virtualization platform I chose VirtualBox (3.1.8). VMware doesn’t support my processor’s virtualization capabilities, so it’s out of the game, and VirtualBox performs better than KVM, on my computer at least, especially when it comes to I/O performance. Now I need to choose an adequate operating system for the virtual environment.

I want the operating system to be as minimal as possible: no need for fancy graphical environments, office suites, web browsers, etc. I started looking for candidates, but man, there are so many distros out there! I would have simply used Ubuntu because I’m used to it, it has huge software repositories, and it would be very similar to my actual operating system; the only problem is that it comes with loads of unnecessary software. I considered installing Debian for exactly that reason, and then I found out about Ubuntu’s JeOS (thanks Amir). JeOS stands for “Just enough Operating System”, and it’s simply Ubuntu’s core. It seemed perfect for my needs. JeOS comes with Ubuntu Server Edition.

The installation is fairly simple and quite fast. On the first installation screen, press F4 and select “Install a minimal system” as shown. There is also a VMware/KVM-optimized version (“Install a minimal virtual machine”), but it’s not optimized for VirtualBox, so I chose the minimal server option. The rest of the installation is a series of simple screens. When I had the option to choose software packages, I chose the basic Ubuntu Server and OpenSSH.

Boot time is also impressive, leaving us at an old-school tty login screen. Cool. The next thing is to install the VirtualBox Guest Additions to make interaction smoother. Before that, a few prerequisites have to be installed:

# sudo apt-get install gcc xserver-xorg-core

This installs gcc and X.Org, the graphical environment server. It won’t install a desktop environment (such as GNOME or KDE), a graphical login manager (GDM or KDM), etc., just the core X server. To install the VirtualBox Guest Additions, choose Devices -> Install Guest Additions from the virtual machine menu. Then:

# sudo mkdir /media/cdrom

# sudo mount -o ro /dev/sr0 /media/cdrom

# sudo /media/cdrom/VBoxLinuxAdditions-amd64.run (depends on your platform)
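To pick the right installer automatically, you can map the machine architecture reported by `uname -m` to the file name. A minimal sketch, assuming the VirtualBox 3.x Guest Additions CD with separate -x86 and -amd64 installers (newer releases may ship a single installer, so check the CD contents); the function name is hypothetical.

```shell
#!/usr/bin/env bash
# Map an architecture (default: uname -m) to a Guest Additions installer name.
# Assumes the VirtualBox 3.x layout with separate x86/amd64 .run files.
vbox_additions_installer() {
  case "${1:-$(uname -m)}" in
    x86_64)              echo VBoxLinuxAdditions-amd64.run ;;
    i386|i486|i586|i686) echo VBoxLinuxAdditions-x86.run ;;
    *) echo "unsupported architecture" >&2; return 1 ;;
  esac
}

# Usage (not run here):
#   sudo /media/cdrom/"$(vbox_additions_installer)"
```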

Make sure you get no errors and voilà! Our new test environment is ready. I took a snapshot (Machine -> Take Snapshot) so I can always return to this basic point. What if you do want a lightweight window manager? I used Fluxbox, but you can install whatever you like. To install Fluxbox:

# sudo apt-get install fluxbox

# sudo apt-get install xinit x11-utils eterm xterm

# echo fluxbox > ~/.xinitrc

The packages eterm and x11-utils allow you to set the Fluxbox background with the “fbsetbg” command. xterm is the standard terminal emulator (I guess one may argue with that, but it’s my favorite anyway). None of them is necessary. To load the graphical environment:

# startx

That’s it. I also managed to recompile the rtl8187 kernel module, but that’s out of the scope of this post. Enjoy your new test environment!

Remote support and process manipulation

Sometimes I help friends who are taking their first steps in the Linux world/shell scripting/etc. I’ve found that the best way to give remote technical support is over a “shared” terminal window. It can be done with the screen command (short tutorial here) or with kibitz, which is part of the standard expect package. The basic operation is similar: the side that needs to be controlled creates the “shared” terminal, and the other side first connects to the same machine (telnet/ssh) and then “attaches” to the shared terminal.

This scenario, although reasonable, has a couple of limitations. First, to support someone, a new shared environment has to be established. You can’t attach to an existing terminal window (unless it is already running inside “screen”), which is inconvenient. Second, shell access is needed before attaching to the shared terminal (an unnecessary privilege). Third, you can’t connect to the shared terminal as a server, which would have been really useful for NAT/firewall bypassing via reverse SSH tunneling.

How can we address these problems? We need a small program that can duplicate an existing process’s file descriptors (stdin/stdout/stderr) and bind them to either another terminal (pty device) or network sockets. I couldn’t find anything that does exactly this, but I found other cool tools that do similar or related things, and I’d like to share them. I think I’m going to write my own tool based on what I found (long live open source!), but till then, you can check these out:

Output redirection of a running process using gdb. This method uses gdb’s ability to attach to an already running process, freeze its normal execution, run arbitrary code and continue. In this particular method, stdout is closed and reassigned to another file. Pretty neat! Here, the same method is used, but instead of closing stdout, it is duplicated to a new file descriptor.
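The close-and-reopen variant can be wrapped in a small function. It relies on the fact that a newly opened file gets the lowest free descriptor, so after closing fd 1 the file created next becomes the process’s new stdout. This is a sketch under assumptions: gdb is installed, you are allowed to ptrace the target (same user or root), and the process uses glibc’s `close()` and `creat()`; the function name and the `DRY_RUN` switch are made up for illustration.

```shell
#!/usr/bin/env bash
# Redirect a running process's stdout to a file via gdb (hypothetical helper).
# close(1) frees fd 1; creat() then returns the lowest free fd, i.e. 1.
# Set DRY_RUN=1 to print the gdb command instead of running it.
redirect_stdout() {
  local pid=$1 file=$2
  set -- gdb -p "$pid" -batch \
    -ex 'call (int)close(1)' \
    -ex "call (int)creat(\"$file\", 0644)"
  if [ "${DRY_RUN:-0}" = 1 ]; then
    printf '%s\n' "$*"
  else
    "$@"   # gdb detaches at the end of -batch and the process continues
  fi
}

# Usage (not run here; run as the process owner or as root):
#   redirect_stdout 1234 /tmp/out.log
```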

Retty is a “tiny tool that lets you attach processes running on other terminals”, which means you can grab any program running in another terminal (for example a text editor or mail client) into your current window. The original session is destroyed, though. Unfortunately, retty can’t run on amd64 platforms (like mine) because it injects i386 assembly instructions into running processes. Retty’s functionality can be achieved, again, with the gdb method using this script.

Neercs is very similar to screen but has unique features, such as grabbing a process that wasn’t initially started inside it, different window layouts, etc. It is based on libcaca, so when I built it I had to manually get the latest version of libcaca, build it, and then build neercs against it (if anyone needs help with this, just leave a comment). Neercs uses a grabbing mechanism similar to retty’s, but they wrote both i386 and x86_64 assembly. In my tests it’s a little less responsive than screen, and it has problems passing F keys and Alt-* keystrokes.

Another cool program is CryoPID, a process freezer for Linux. It captures the state of a running process and saves it to a file. The process can be resumed later, even on another machine. Unfortunately, I couldn’t get it to compile on Ubuntu 9.10, and there is no Launchpad package either 😦

That’s all. If anyone has better solutions, I’ll be glad to hear them.

Sending mail from command line

Recently I wanted to add mail-sending functionality to one of my scripts. This script runs on my desktop computer, so no fancy company mail servers/fixed IP/DNS records for me. When I googled it, I found many different methods of varying complexity. My need was the simplest you can think of: just sending email. I didn’t care if it’s always from the same address. My solution was to use Ubuntu’s default mail server, exim4, with Gmail. Exim authenticates with your Gmail user/password, and the mail is always sent from the same address (user@gmail.com). This is heavily based on this guide, although a little different.

First I had to install exim4-config, so:

# sudo apt-get install exim4-config

Then I needed to configure exim to work with Gmail:

# sudo dpkg-reconfigure exim4-config

My selections:

- General type of mail configuration: mail sent by smarthost; no local mail

- System mail name: localhost

- IP-address to listen: 127.0.0.1

- Other destinations for which mail is accepted: (leave blank)

- Visible domain name for local users: localhost

- IP address or host name of the outgoing smarthost: smtp.gmail.com::587

- Keep number of DNS-queries minimal: no

- Split configuration into small files: no

- Root and postmaster mail recipient: (leave blank)

Edit /etc/exim4/passwd.client (you can use gedit if you’re not comfortable with vi):

# sudo vi /etc/exim4/passwd.client

Add these lines (replace “user” and “password” with your own):

gmail-smtp.l.google.com:user@gmail.com:password

*.google.com:user@gmail.com:password

smtp.gmail.com:user@gmail.com:password
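Each passwd.client line has the form host-pattern:login:password, so the three lines above can be generated instead of typed. A minimal sketch; the function name is hypothetical, and it assumes the password contains no “:”, since that character separates the fields.

```shell
#!/usr/bin/env bash
# Print the three passwd.client lines for one Gmail account.
# Assumes the password contains no ':' (the field separator).
gmail_passwd_client() {
  local user=$1 password=$2 host
  for host in gmail-smtp.l.google.com '*.google.com' smtp.gmail.com; do
    printf '%s:%s:%s\n' "$host" "$user" "$password"
  done
}

# Usage (not run here); tee -a appends and keeps the file's existing permissions:
#   gmail_passwd_client user@gmail.com 'password' | sudo tee -a /etc/exim4/passwd.client >/dev/null
```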

Finally, update (refresh) the exim configuration:

# sudo update-exim4.conf

That’s about it. To send the contents of /etc/motd as mail (just an example):

# cat /etc/motd | mail -a "FROM: user@gmail.com" -a "BCC: somemail@somedomain.com" -s "This is the subject" recipient@somedomain.com

The “BCC:” header is optional, of course. If you don’t specify “FROM:”, the default is the current user.

If you don’t have the “mail” command, just install mailutils:

# sudo apt-get install mailutils
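If you’d rather not depend on the mail client’s options (in newer GNU mailutils releases `-a` attaches a file instead of adding a header), you can hand a complete message to exim directly. A minimal sketch, assuming exim4’s `/usr/sbin/sendmail` interface, where `-t` reads the recipients from the To/Cc/Bcc headers; the function name and addresses are placeholders.

```shell
#!/usr/bin/env bash
# Build a complete message: headers, a blank line, then the body from stdin.
build_message() {
  local from=$1 to=$2 subject=$3
  printf 'From: %s\nTo: %s\nSubject: %s\n\n' "$from" "$to" "$subject"
  cat
}

# Usage (not run here):
#   build_message user@gmail.com recipient@somedomain.com "This is the subject" \
#     < /etc/motd | /usr/sbin/sendmail -t
```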

Happy mailing!

USB Multibooting

I recently wanted to add multiboot support to my USB disk-on-key. I mainly use it for casual file transfers and playing music in my car, but I also have BackTrack installed on it. From time to time I get to use it on a friend’s computer. BackTrack is one of many “live CD” operating systems, meaning you can boot it directly from a CD drive/USB thumbdrive without affecting your hard disk. When you finish, you just eject the CD/thumbdrive, reboot, and everything goes back to normal as if nothing happened.

Live operating systems are very useful in many cases, usually when you want to perform operations that you can’t or don’t want to do within your normal operating system, such as virus cleaning (if you’re infected and the virus killed your antivirus), hard disk backups, computer forensics, security assessment, file access, resetting your password, diagnosing hardware problems, checking whether your hardware is supported by a new operating system, etc.

Multibooting means being able to boot more than one operating system. Unfortunately, most “live” operating system makers just provide an image file (ISO) you can burn to CD/DVD. At most, they give instructions for installing to a USB thumbdrive, instructions that usually involve formatting it; and even if they don’t, you would still be able to boot only the last installed operating system (if you install more than one).

The method I’m going to present is inspired by the pendrivelinux.com guide. They made a Windows utility to get the job done, but you only get the final result, without understanding how it works or being able to customize it. This post is a step-by-step guide to making a multibootable USB thumbdrive from scratch using Linux; plus you get to understand how it works, plus you can customize it, plus you don’t have to use a physical drive, as the whole thing can be emulated (very useful for testing). If you do it on a physical drive, make sure to back up your data first!!! Everything worked out of the box on my Ubuntu 9.10. If you have problems with other Linux distros, post a comment and I’ll try to help.

We will use grub4dos as the bootloader. Download it from here. For this tutorial I’m going to use an emulated USB thumbdrive, with its data stored in a file named “usb.dsk”. Whenever I do something with this file and you are using a real USB thumbdrive, use the device file that represents your thumbdrive instead, such as /dev/sdd (you can figure it out with the df -h command). Alright, let’s get our hands dirty.

Creating the emulation file (skip if you use physical device)

We just need to create an empty file of the size we want. It can be done with the “dd” command. By default dd works with 512-byte blocks, which explains the following numbers (you can use any size; here are 2GB and 4GB examples):

4GB file creation:

# dd if=/dev/zero count=7892040 of=usb.dsk

2GB file creation:

# dd if=/dev/zero count=4029440 of=usb.dsk
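The counts above are simply the device size in 512-byte sectors. If you prefer to start from a disk geometry, the size in sectors is cylinders × heads × sectors per track; with the 2GB geometry used below (250 × 255 × 63) that is 4016250 sectors, slightly fewer than the 4029440 above, so the extra space at the end of that file simply goes unused. A small helper (the name is hypothetical):

```shell
#!/usr/bin/env bash
# Number of 512-byte sectors for a CHS geometry.
chs_sectors() {
  local cylinders=$1 heads=$2 sectors=$3
  echo $((cylinders * heads * sectors))
}

# Usage (not run here): a file exactly matching the 2GB geometry; count=0 with
# seek= makes a sparse file of that size almost instantly.
#   dd if=/dev/zero of=usb.dsk bs=512 count=0 seek="$(chs_sectors 250 255 63)"
```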

Partitioning the emulated/physical device

If you use a physical device and you don’t want to repartition/format it, the only requirement is to have a FAT partition. You can check this with “fdisk -l” on your device file, for example “sudo fdisk -l /dev/sdd”. Otherwise, keep reading this section (don’t forget to replace usb.dsk with your device file wherever it appears; you may also need to run everything with sudo).

We need to partition our newly created file:

# fdisk usb.dsk

Ignore “you must set” warnings if you get any. fdisk needs to know the physical structure (geometry) of our emulated device, so we must tell it manually (if you use a physical device, ignore this). As I had no idea what structure I wanted, I just copied it from existing devices I own. 4GB device: sectors=62, heads=125, cylinders=1018. 2GB device: sectors=63, heads=255, cylinders=250. I don’t think these numbers really make any difference.

To tell fdisk the structure (this is for the 2GB file, but you can change the numbers):

Command (m for help): x

Expert command (m for help): s

Number of sectors (1-63, default 63): 63

Warning: setting sector offset for DOS compatiblity

Expert command (m for help): h

Number of heads (1-256, default 255): 255

Expert command (m for help): c

Number of cylinders (1-1048576): 250

Expert command (m for help): r

Command (m for help):

Now that the device structure is set, use “p” to print the current partition table. The output should look like this (the disk identifier may differ):

Command (m for help): p

Disk usb.dsk: 0 MB, 0 bytes

255 heads, 63 sectors/track, 250 cylinders

Units = cylinders of 16065 * 512 = 8225280 bytes

Disk identifier: 0x1194fc93

Device Boot Start End Blocks Id System

Command (m for help):

If any partitions are shown, delete them with “d”. Make sure you don’t need the data already there, as it will be deleted! Now we need to create a new bootable FAT partition and we’re done. Just follow my commands (once again, the numbers are for 2GB; you can use other numbers, as explained before):

Command (m for help): n

Command action

e extended

p primary partition (1-4)

p

Partition number (1-4): 1

First cylinder (1-250, default 1): 1

Last cylinder, +cylinders or +size{K,M,G} (1-250, default 250): 250

Command (m for help): t

Selected partition 1

Hex code (type L to list codes): c

Changed system type of partition 1 to c (W95 FAT32 (LBA))

Command (m for help): a

Partition number (1-4): 1

Command (m for help): w

The partition table has been altered!

WARNING: If you have created or modified any DOS 6.x

partitions, please see the fdisk manual page for additional

information.

Syncing disks.

If everything went smoothly, you now have one FAT partition spanning the whole device.

Installing GRUB4DOS to Master Boot Record

To install grub4dos, just run bootlace.com <device name> from the directory where you extracted the zip archive:

# grub4dos-0.4.4/bootlace.com usb.dsk

Output should look like:

Disk geometry calculated according to the partition table:

Sectors per track = 63, Number of heads = 255

Success.

Formatting the device

If you use a physical device, skip to the last command in this section (“Creating the FAT filesystem”). To format the partition (create a new FAT filesystem on it), we first need to calculate its offset in our file. The partition starts at the second track. The number of sectors per track is what we defined before (2GB: 63, 4GB: 62) and each sector is 512 bytes, meaning we need to skip 512 x 63 = 32256 bytes (in the 2GB case).

Setting up the loop device with correct offset (2GB: 32256, 4GB: 31744):

# sudo losetup -o 32256 /dev/loop0 usb.dsk
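The offset arithmetic is easy to get wrong, so here is a small helper. It assumes, as above, that the partition starts right at the second track and that sectors are 512 bytes; the function name is hypothetical. If /dev/loop0 is already in use on your machine, `losetup -f --show` picks a free loop device and prints which one it used.

```shell
#!/usr/bin/env bash
# Byte offset of a partition starting at the second track:
# sectors-per-track * 512.
first_partition_offset() {
  local sectors_per_track=$1
  echo $((sectors_per_track * 512))
}

# Usage (not run here):
#   sudo losetup -f --show -o "$(first_partition_offset 63)" usb.dsk
```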

Creating the FAT filesystem (for a physical device, use your partition’s device file, such as /dev/sdd1, the first partition of sdd):

# sudo mkfs -t vfat /dev/loop0

Ignore the warnings about floppy size if you get any.

Copying necessary files

First we need to mount the filesystem (for a physical device, use your partition’s device file):

# sudo mkdir /mnt/usbdevice

# sudo mount -o uid=`id -u` /dev/loop0 /mnt/usbdevice

Copy “grldr” from the extracted grub4dos archive:

# cp grub4dos-0.4.4/grldr /mnt/usbdevice

We’re almost finished. Your device is now bootable and boots grub4dos. We just need to configure the boot menu. The configuration file is “menu.lst”, and it must be placed in the root directory of your device. You can either start with the sample file from grub4dos or use mine:

default 0

timeout 30

splashimage=(hd0,0)/splash.xpm.gz

foreground=d2d1d0

background=537ba7

title Ubuntu 10.04 64bit

find --set-root /ubuntu-10.04-desktop-amd64.iso

map /ubuntu-10.04-desktop-amd64.iso (0xff)

map --hook

root (0xff)

kernel /casper/vmlinuz file=/cdrom/preseed/ubuntu.seed boot=casper persistent iso-scan/filename=/ubuntu-10.04-desktop-amd64.iso splash

initrd /casper/initrd.lz

title Ultimate Boot CD 4.11

find --set-root /ubcd411.iso

map /ubcd411.iso (hd32)

map --hook

chainloader (hd32)

In this example I only have two live operating systems: Ubuntu 10.04 64bit and Ultimate Boot CD 4.11. All the files specified should be in the root directory of your device. You can find the splash image I made here, ubcd411.iso here, and ubuntu-10.04-desktop-amd64.iso here.
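For ISOs that can simply be chainloaded, like the UBCD entry, the menu.lst stanza follows a fixed pattern, so it can be generated. A minimal sketch that reproduces the UBCD entry’s structure for any ISO name; the function name and the example ISO are hypothetical, and ISOs that need their own kernel line (like the Ubuntu entry) won’t boot this way.

```shell
#!/usr/bin/env bash
# Print a grub4dos entry that maps an ISO as a virtual drive and
# chainloads it, following the Ultimate Boot CD entry above.
iso_menu_entry() {
  local title=$1 iso=$2
  printf 'title %s\n' "$title"
  printf 'find --set-root /%s\n' "$iso"
  printf 'map /%s (hd32)\n' "$iso"
  printf 'map --hook\n'
  printf 'chainloader (hd32)\n'
}

# Usage (not run here; hypothetical ISO name):
#   iso_menu_entry "My Live CD" mylive.iso >> /mnt/usbdevice/menu.lst
```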

Basically, you can use any bootable ISO file you want. However, some customization might be required; as you can see, the two entries aren’t identical. For a better understanding of grub4dos’s “map” command, you can use this guide. I tried the same method (booting directly from the ISO file) for BackTrack 3, but it didn’t work. I ended up extracting the BT3 directory from bt3-final.iso and putting it in the root directory. I also extracted the “boot” directory and put it inside the BT3 directory. I then added this entry to menu.lst:

title Backtrack 3.0

root (hd0,0)

kernel /bt3/boot/vmlinuz vga=0x317 initrd=/bt3/boot/initrd.gz ramdisk_size=6666 root=/dev/ram0 rw

initrd /bt3/boot/initrd.gz

It worked. The boot parameters make it load with a framebuffer console (the way I like it). Anyhow, you can get ideas for many more interesting live operating systems from pendrivelinux.com; their menu.lst and splash image can be found in their source archive, MultiBootISOs-Src.zip.

Finishing up

Congrats! Everything is done. Let’s close everything we opened:

# sudo umount /mnt/usbdevice

# sudo losetup -d /dev/loop0

If you want to emulate a real USB device backed by your usb.dsk, use:

# sudo modprobe g_file_storage file=usb.dsk

Wait a couple of seconds and it should be recognized. To stop:

# sudo modprobe -r g_file_storage

You can also use the same file as a raw hard disk with kvm (if you don’t know how to use kvm/qemu, this is definitely not the place to explain it):

# qemu-system-x86_64 -m 512 -hda usb.dsk

Pretty cool, huh?

Cool command line stuff

I made this list of cool things you can do from the shell, especially for desktop users. They all work on my Ubuntu, and most of them are generic (except maybe apt-get, which works on Debian-based distributions). The list is not ordered or categorized; it’s really just a bunch of things a little different from the usual text-manipulation one-liners. They are all useful, at least for me.

Note: if you copy-paste, watch out: WordPress turns straight quotes into curly ones and double hyphens into a dash, so use regular double quotes and double hyphens wherever they appear.

Make cd/dvd image copy

# dd if=/dev/cdrom bs=1024k of=my_cd.iso

Make cd/dvd image copy to remote computer

I use my old computer’s drive, since mine broke. Run this on the computer that has the drive:

# dd if=/dev/cdrom bs=1024k | ssh remote_computer "cat > my_cd.iso"

Mount existing cd/dvd image copy (iso file)

# mkdir /tmp/my_cd

# sudo mount -o loop my_cd.iso /tmp/my_cd

When you’re done, don’t forget to:

# sudo umount /tmp/my_cd

# rmdir /tmp/my_cd

Use the last argument of the previous command with !$

# mkdir -p really/long/path/that/you/hate/typing

# cd !$
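!$ is history expansion, which bash only enables in interactive shells, so it won’t work inside a script. There, bash’s $_ (the last argument of the previous command) does the same job. A small sketch; mkcd is a hypothetical helper name:

```shell
#!/usr/bin/env bash
# Hypothetical helper: create a directory (with parents) and enter it.
# After mkdir runs, $_ holds its last argument, i.e. the new path.
mkcd() {
  mkdir -p -- "$1" && cd -- "$_"
}
```

Usage: mkcd really/long/path/that/you/hate/typing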

Find all files containing a certain text

Let’s say we want to find all files under /usr/include named “*.h” containing _REGEX_H:

# find /usr/include -name "*.h" -exec grep -l "_REGEX_H" {} \;
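With \; find starts one grep process per file. Ending -exec with + instead passes many file names to each grep, which is usually faster on big trees. A sketch wrapping that variant; files_containing is a hypothetical name, and -type f and -F (match the text literally rather than as a regular expression) are my additions:

```shell
#!/usr/bin/env bash
# Hypothetical helper: print regular files under a directory whose names
# match a pattern and whose contents contain a fixed string.
#   $1 = directory, $2 = name pattern (quote it!), $3 = text to look for
files_containing() {
  local dir=$1 name=$2 text=$3
  # "{} +" batches file names into as few grep runs as possible;
  # -l prints only matching file names, -F treats the text literally.
  find "$dir" -type f -name "$name" -exec grep -lF -- "$text" {} +
}
```

Usage: files_containing /usr/include '*.h' _REGEX_H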

Convert between character sets

Make sure you have “libc-bin” installed (sudo apt-get install libc-bin)

# curl -L http://www.idown.me | iconv -f windows-1255 -t utf-8
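To see what the conversion actually does, here is a tiny offline check that needs no web page. The Hebrew letter alef is the single byte 0xE0 (octal 340) in windows-1255 and the two bytes D7 90 in UTF-8; to_utf8 is a hypothetical wrapper name:

```shell
#!/usr/bin/env bash
# Hypothetical wrapper: read windows-1255 text on stdin, write UTF-8.
to_utf8() {
  iconv -f windows-1255 -t utf-8
}

# Feed it one windows-1255 byte (alef) and dump the resulting bytes in hex.
printf '\340' | to_utf8 | od -An -tx1
```

The hex dump should show d7 90. The same wrapper works on the web page too: curl -L http://www.idown.me | to_utf8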

Read sent/received SMS from your jailbroken iPhone

Make sure you have “sqlite3” installed (sudo apt-get install sqlite3)

# scp root@your_iphone_ip:/private/var/mobile/Library/SMS/sms.db .

# sqlite3 sms.db "select * from message"

Incrementally backup directory to external hard drive

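Note: this command is dangerous: with --delete, any file in the destination directory that doesn’t exist in the source directory is deleted. It’s good if you want a 1:1 copy of a directory and want to sync only the changes in the future (removing a file from the source directory also counts as a change and will be replicated the next time you run it). -t preserves modification times, and --modify-window=2 makes rsync treat timestamps that differ by up to 2 seconds as equal, which is useful when you sync from an EXT2/3 filesystem to FAT32, since FAT stores modification times with only 2-second precision.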

# rsync -rot --inplace --delete --progress --modify-window=2 source_dir destination_dir
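Since --delete removes files, it’s worth previewing a sync before running it for real. A minimal sketch; backup_preview is a hypothetical helper that adds rsync’s --dry-run (change nothing) and --itemize-changes (list each change) to the flags above. Note that it passes the source with a trailing slash, which syncs the directory’s contents into the destination; without the slash, as in the command above, rsync creates destination_dir/source_dir instead.

```shell
#!/usr/bin/env bash
# Hypothetical helper: show what the backup command would copy or delete,
# without touching anything.
#   $1 = source directory, $2 = destination directory
backup_preview() {
  local src=$1 dst=$2
  # Trailing slash: compare the contents of $src with the contents of $dst.
  rsync -rot --inplace --delete --modify-window=2 \
        --dry-run --itemize-changes "$src/" "$dst"
}
```

Usage: backup_preview source_dir destination_dir. Lines mentioning “deleting” are files a real run would remove.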

Disable compiz window manager (without killing current desktop session)

# DISPLAY=$DISPLAY metacity --replace &

Enable compiz window manager (without killing current desktop session)

# DISPLAY=$DISPLAY compiz --replace &

Local port forwarding

We will listen on port 4545 and forward to local port 22 (ssh):

# mknod tmp_pipe p

# nc -kl 4545 0<tmp_pipe | nc localhost 22 1>tmp_pipe

Now you can try ssh localhost -p 4545. You can also forward to a remote host; just replace localhost with the host you want.

Track your Dominos pizza order

Well, I’ve no idea whether it works, since it’s for US customers only, but you can check out the script here.

Ubuntu 10.04 Lucid Lynx

Today, Canonical will release Ubuntu 10.04 LTS (long term support). I’m usually not so enthusiastic about new Ubuntu releases, but this time is different. They added some sweet features (well, sweet for me at least) that didn’t get the publicity they deserve. This is not a comprehensive review of the new features; it’s about the ones I find cool (or bad) enough to write about, from a desktop user’s point of view.

First, I must say I love Ubuntu. With each release they turn some of the most annoying tasks (for Linux newbies) into trivial, intuitive ones. It has great documentation, community support and amazing software package repositories. It is also widely supported by third-party vendors. Second, they usually add performance boosts and cool new features with new releases. Third, you can order official CDs for free. What’s not to love?

So, what do we get this time? As always with LTS releases, three years of support (bug and security fixes). A fresh new getting-started manual for beginners, which looks very promising. Some crap as well: a new look and feel and social network integration. As if it’s that hard to change the look or use an all-in-one social network client…

Performance boosts. First, boot speed improvement. They already made a big leap from 9.04 to 9.10, and now again? Sounds delicious. “Super fast” boot for SSD-based machines such as netbooks. Sounds very delicious. Second, faster suspend/resume for your netbook that will “extend battery life”. Excuse me for being skeptical, but come on… speed improvements are always good, but claiming they will save battery? I don’t buy it.

Ubuntu One enhancements. I never got the point of Ubuntu One. It’s supposed to be a personal cloud that keeps your files, notes, bookmarks and contacts on the net, but we’ve had these services for a long time (for example Gmail’s contacts, which can be synced to your mobile, Dropbox file storage, or Delicious bookmarks). Anyway, the new enhancements are better desktop integration and a new Music Store. For me, they’re both useless, but I guess Canonical deserves its chance to fight Apple’s music store; plus, it’s DRM-free.

Software Center 2.0. A supposedly better interface for software installation and maintenance. I haven’t seen this one yet, but it sounds just like a GUI facelift; the underlying software deployment mechanisms stay the same (apt/PPA repositories).

The sweet features I mentioned in the prologue: the inclusion of libimobiledevice in the official repositories. This is a software library that supports iPhone, iPod Touch and iPad devices. Programs built on this library provide filesystem access, music/video sync, internet tethering, app installation, springboard icon management, GNOME integration, and much more! I’ve no idea how it got such lousy publicity, but for me that’s the real killer app!

EDIT: For now only version 0.9.7 of libimobiledevice is in the repositories, which means that only music sync works out of the box. It’s a shame. Two weeks ago I asked the official maintainer of the packages to make packages for 1.0.0, and I thought he told me they would be included in the Lucid release, but I misunderstood him: he actually told me that it was too close to Lucid’s release for inclusion. I apologize for the (partially) wrong information. Anyhow, you can still build 1.0.0 from source and use it. If there’s enough demand, I’ll write a how-to guide. Leave a comment or send me an email if you’re interested.